Approaches to Sound

What is sound? If a tree falls alone in the forest, does it make a sound? The latter question comes from a philosophical tradition of empirical idealism, which claims that the reality of the world is just our perception of the world. We can follow this tradition and describe sound in terms of what we hear. Or we can insist that sound is a kind of physical phenomenon, regardless of whether we can hear it or not. Alternately, as we will see, we can think about sound in the abstract, independently of both our perception and the world. All of these approaches have something to offer us, but they are not equivalent. While they are partly complementary, they are not always compatible, either. For the creator, adopting a mode of thinking about the world should be a conscious choice. Unfortunately, most introductory technical treatments of sound follow one or more of these paths implicitly, treating philosophical questions—which artists are better equipped to handle—as if they were empirically settled matters. We may not answer any of the core questions of philosophy, but in order to work with sound, we have to have a working idea of what sound is, or what we wish sound would be, or what we find important about sound. We can be conscious of these ideas or not, but either way they will make themselves present in the work.

Physical Interpretation

Let's first go over the most common way to define sound: sound is the motion of a longitudinal pressure wave in air or some other compressible medium. Studying sound in this way is called acoustics.

Air, or any other gas, consists of billions of individual molecules, all of which are in random motion, colliding with each other and with any objects which happen to share their space. The force with which they would strike objects in a space is the pressure of that space, and this depends on temperature (how energetically the molecules are moving, on average), and the density of the molecules (how many molecules are in a space of a certain size).
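
As a rough sketch of that dependence, and assuming the air behaves as an ideal gas (an idealization this essay does not otherwise rely on), the relationship can be written as:

$$
p = n \, k_B \, T
$$

Here $p$ is the pressure, $n$ is the number of molecules per unit volume, $T$ is the absolute temperature, and $k_B$ is Boltzmann's constant. These symbols are introduced only for this illustration. Raising either the density or the temperature raises the pressure.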

Air has a certain default, constant pressure. Anything that pushes against the air moves more molecules into a smaller space, creating a tiny place where there is more pressure. This is called compression. These molecules have to come from somewhere, and so that same motion will create a tiny place behind the pushing object where there is less pressure. This is called rarefaction. Areas with higher pressure will quickly dissipate that extra pressure to the surrounding air, while the air around lower pressure areas will quickly rush into that lower pressure and boost it back up. The result is that the tiny pockets of high and low pressure will travel outward from their source, without the air molecules themselves moving much. This is a sound wave. It travels from its source to its destination (we also say it propagates from its source to its destination) at a constant rate, the speed of sound in air. Because the areas of compression and rarefaction are happening along the same direction as the sound wave is moving, we call this a longitudinal wave.

There are many more things to say about the motion of sound waves, conceived as pressure in air. But we will leave those for later essays in the series. For the moment, let's consider the boundaries of this interpretation of sound.

A sound wave, first of all, comes from somewhere. While it is possible for chemical reactions within the air to change its characteristic pressure and create sounds directly (this happens with combustion, for example), most sounds start with vibrating objects, such as a steel string or a speaker cone. These objects push and pull at the air, which then creates the characteristic pressure waves as described above. Different objects have different characteristic motions, which in turn create different types of motion in the air. Generally objects will vibrate any way they can. A fixed string, for example, can vibrate with linear motion (the string as a whole bowing out and then back along some plane), but it can perform this motion simultaneously in both the directions in which it is not fixed, and so have a circular or elliptical motion. In addition to this, a displacement on one side of the string (for example, the side which is struck with a pick) will travel up the string until it hits the point at which it is fixed, bounce back to travel down the string, etc. Further, as we will see later, the whole string will vibrate, each half of the string will vibrate, each third of the string will vibrate, etc. This complex, three-dimensional motion creates pressure waves in the air in three dimensions. While we understand the simplified cases of the motion of pressure in air very well, and while most of the time these simplified cases are excellent approximations for the real physical phenomena, the actual motion can be very complex.

According to one way of thinking about sound, there is no sound on the string itself, but these vibrations only become sound when they reach the air. The problem, however, is that sound waves can travel in many different media. In water, for example, because it is a liquid and much more difficult to compress, a much smaller change in density results in a much higher change in pressure, and sound waves travel at a much higher speed. But sound also travels from the strings to the wooden body of the instrument, which vibrates as the sound moves through it. Plate reverb essentially consists of small speakers that put a sound wave onto a metal plate, in which sound travels relatively slowly and reflects very efficiently. With bone conduction headphones, the sound is converted to vibrations in our skull, which are then directly coupled with our ear, in which case there is a sound that is produced and heard without there ever having been a sound wave in the air at all.

For some of these media, the pressure difference in air leads to a similar longitudinal wave in the other medium, which might then produce another pressure difference in air. For other things, such as sheets of metal or wood, the sound wave travels as an up and down displacement that travels along the surface (known as a transverse wave), which creates a force dependent on the elasticity of the medium rather than a change in pressure. While fluctuating voltages are usually thought of as a representation of sound rather than sound itself (this is the “analogy” in analog), arguably the motion of electrons in a wire is more akin to the motion of sound in air than is sound traveling through a sheet of metal. When sound becomes electricity with a condenser microphone, one charged plate is moved towards another. This causes the electrons in the plate to become closer together, which creates a kind of electrical pressure that we call voltage. The above pictures could just as easily represent the increased and decreased density of electrons in a voltage waveform.

Psychoacoustic Approach

So if all of these rather different physical phenomena could be considered to be sound, what is sound then? Another way to answer this question is to say: sound is just what we, as humans, hear. Studying sound in this way is called psychoacoustics, although there are some related fields, such as otolaryngology (the medical study of the ear, nose, and throat), which we will look at under this same category. There are probably just as many books about sound which begin with a description of the human ear as with a description of sound waves in air, so let's explore that approach. As with physical acoustics, there is much, much more to be said about psychoacoustics than I will say here.

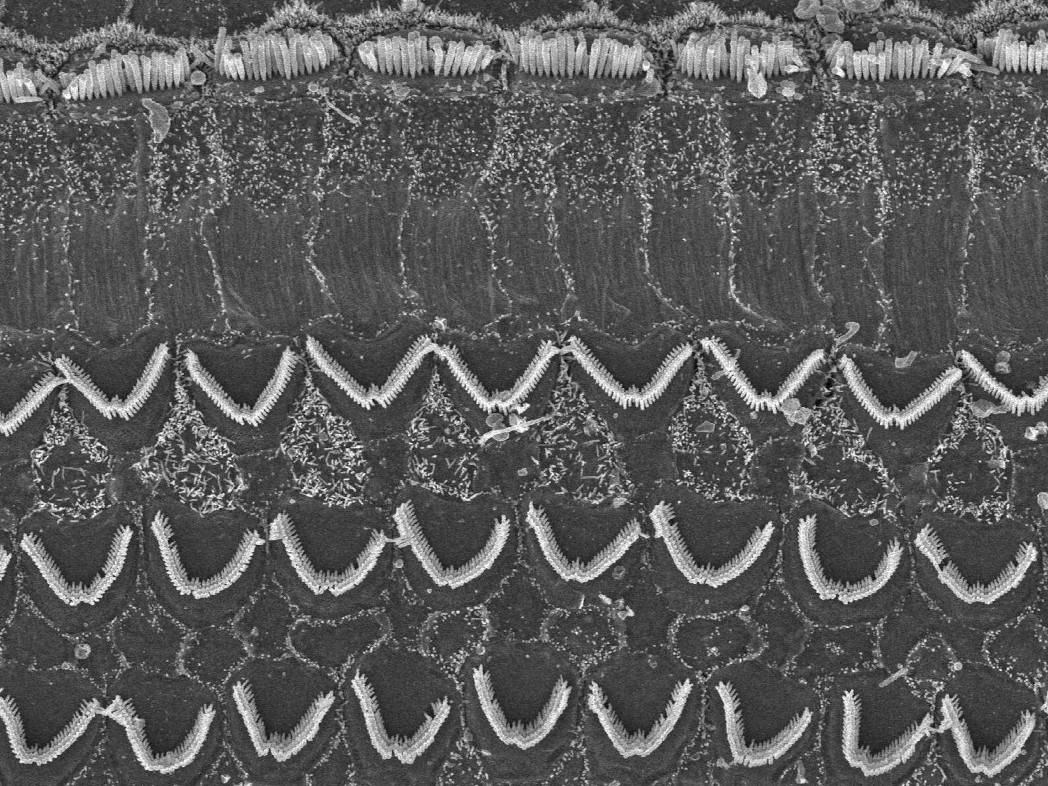

Our ear is an exceedingly complex device which we do not yet understand completely. It consists of various membranes and bones that transfer sound into motion within a spiral area filled with fluid, known as the cochlea, named after “snail” in Latin due to its resemblance to a snail shell. This spiral has different diameters, mass, and stiffness, such that different frequencies are emphasized at different points of the spiral. It is lined with about 20,000 sensory cells that resemble tiny hairs, which collectively analyze a sound and translate it into nerve impulses.

In the past, we understood hearing as a fully passive activity. Often, psychoacoustic treatments of sound still present hearing in this way. In this story, the different frequency sensitivities of the cochlea cause different parts of the cochlea to vibrate with different frequencies. In turn, this causes different hair cells to vibrate, which sends impulses on different nerves to our brain, which then interprets the information. Sounds are sorted by frequency and then produce impulses, telling us which frequency nerve was triggered, and giving us a sort of spectrogram of the sound.

Despite how often it is repeated, this story has been fishy for a long time. There have been many psychoacoustic experiments with participants listening to sounds at two frequencies and seeing whether they could tell them apart. These experiments discovered to a high degree of confidence that our discrimination between two different frequencies is incredibly good. So much so that it is an open question whether our hearing or the way our brain interprets what we hear is the limiting factor for these tests. The trouble is that the model of the ear as a passive device has very wide frequency bands, and this would lead us to think that we were incredibly bad at discriminating between several nearby frequencies.

Something else must be happening. It turns out that the ear is not just sitting there; it's doing things to selectively amplify particular frequencies, with feedback from the brain. While the idea of the ear as an active device was proposed as early as the 1940s, this was not verified until the late 1970s, and not really explained until the last 20 years. We are still working on that explanation, but it goes roughly as follows:

The inner hair cells which sense sounds are accompanied by outer hair cells. These cells are tuned to a certain frequency such that they actively amplify motion at that specific, narrow frequency. When they are not active, the frequencies in that tiny range are relatively quiet. This tunes the inner cells to be very selective. These inner cells themselves do not just give a vague signal that sound is present. They fire at the peak of each waveform, translating the wave itself into a series of electrical pulses that travel through the brain with the same frequency as the sound wave that hair cell is receiving. What we don't know yet is what determines when outer hair cells actively amplify certain frequencies rather than others. But given that the outer hair cells are coupled with nerves coming from the brain, it is likely that there is some form of direct control of the type of spectrum which is produced based on higher level processing. That is, when we listen closely to a part of a sound, we may be literally changing the spectrum that we hear.
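
To make the idea of firing at the peak of each waveform concrete, here is a toy sketch in Python. It is not a physiological model. It only marks the local maxima of a sampled sine wave and checks that the resulting pulses arrive at the same rate as the wave itself. The function name `peak_times`, the 440 Hz tone, and the 48 kHz sample rate are all assumptions made for illustration.

```python
import math

def peak_times(samples, sample_rate_hz):
    """Return the times (in seconds) at which the samples reach a local maximum."""
    return [
        i / sample_rate_hz
        for i in range(1, len(samples) - 1)
        # A peak is higher than the sample before it and not lower than the one after.
        if samples[i - 1] < samples[i] >= samples[i + 1]
    ]

sample_rate_hz = 48000  # assumed sample rate
frequency_hz = 440      # assumed test tone

# One second of a sine wave, standing in for the motion reaching one hair cell.
wave = [
    math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz)
    for i in range(sample_rate_hz)
]

# One pulse per cycle: the pulse train repeats at the frequency of the wave.
pulses = peak_times(wave, sample_rate_hz)
mean_interval_s = (pulses[-1] - pulses[0]) / (len(pulses) - 1)
```

In this sketch the number of pulses in one second matches the frequency of the tone, and the average time between pulses matches its period.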

But even if this were not the case, in our artistic practices we frequently alter sound to make things audible which we would not otherwise be able to hear, like altering the pitch of a sound outside of the normal range of hearing, using a parabolic microphone to selectively amplify sounds at greater distances than we can hear unaided, or filtering sounds in a way that emphasizes parts that we may not have heard immediately. Even where we are limited as humans, the tools with which our culture provides us enhance our abilities, and we become more than the human template. As Donna Haraway puts it, we are already cyborgs. A modular synthesizer is a microscope for the ear, creating and uncovering new relationships between sounds as a process of discovery. What we need is cyborg psychoacoustics, not human psychoacoustics.

Nor, of course, is there only one kind of human. People with limited hearing or without hearing nevertheless live in a world in which sound is present, and they observe that sound. Just as with hearing people, there is a wide variety of experiences and abilities among those without hearing. Generally those without hearing observe sound through the way vibrations resonate and feel differently on different parts of their body, often using an object which exaggerates those vibrations, such as a cup or a balloon. Although it is rarely taught, one can learn to discriminate pitch reasonably accurately through these vibrations.

Sound is not an experience. Sound is something that we are trying to discover. When we define sound experientially, it is difficult to accommodate this process of discovery. After all, the sum total of what we are able to discover about sound doesn't look like a psychological phenomenon. It looks more like acoustics, or physics, or music, or mathematics. If experience is discovery, there is no longer such a clear separation between a scientific and an aesthetic point of view.

Sound as an Abstraction

In physical sound, the pressure waves in the air can create and be created from pressure waves in water, tension waves in strings and wood, and even, by pushing on a charged plate, or by a charged plate pushing against the air, electrical waves in a wire. Sound is not any one of these phenomena; it is all of them. But it is not the objects (air, wood, electrons, etc.) or the forces (pressure, tension, voltage, etc.). Sound is an abstract phenomenon that travels through all of these things. Sound is a wave. Each of these objects and forces can be the medium in which that wave travels, just like a written word can travel on paper, through the phosphors on your screen, etc., without fundamentally changing what writing is.

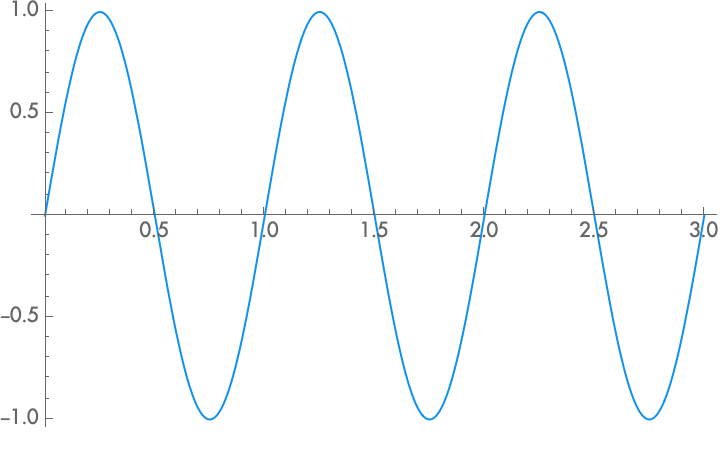

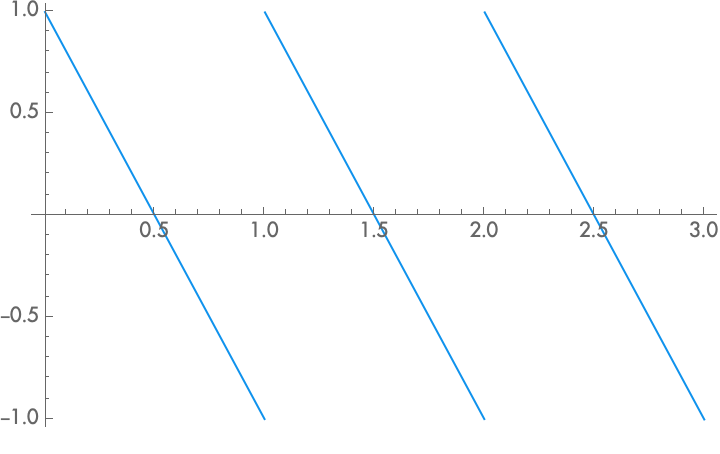

In its most basic sense, a wave is just repetition. Something measurable begins in a certain way. There is a certain amount of pressure, or a certain displacement of a section of string, etc. And then it changes. The pressure recedes, or the string moves in the other direction. But it returns to that beginning, over and over. A wave thus involves two variables: time (when changes happen), and whatever measures the wave in that medium (the changes themselves). We call the value of the measurement the amplitude, and generally we measure it from some theoretical central resting point.
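
The two variables described above can be sketched as a list of (time, amplitude) pairs. The example below uses a sine wave as the simplest repeating shape; that choice, along with the function name `sample_wave`, the 8000 Hz sample rate, and the 100 Hz frequency, is an assumption for illustration rather than anything the definition requires.

```python
import math

def sample_wave(frequency_hz, duration_s, sample_rate_hz=8000, peak=1.0):
    """Return (time, amplitude) pairs for a sine wave measured from a resting point of 0."""
    count = round(duration_s * sample_rate_hz)
    return [
        (
            i / sample_rate_hz,  # time: when the measurement is taken
            peak * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz),  # amplitude
        )
        for i in range(count)
    ]

# Two full cycles of a 100 Hz wave: 0.02 seconds at 8000 samples per second.
samples = sample_wave(frequency_hz=100, duration_s=0.02)

# One cycle lasts 8000 / 100 = 80 samples, so the amplitude at sample i
# returns at sample i + 80. That return is the repetition.
samples_per_cycle = 8000 // 100
```

Nothing in these pairs says whether the amplitude is a pressure, a displacement, or a voltage, which is the sense in which the description is abstract.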

This measurement can be complex. For example, sound in air will create areas of lower and higher pressure that travel in three dimensions. The complexity doesn't even have to be homogeneous. For example, the motion of the diaphragm of a relatively sealed condenser microphone builds both electrical pressure (voltage) and air pressure at the same time, both of which could contribute to its directional character. Sometimes this complexity is important. For example, the complexity of reverberation in certain spaces depends on the multiple paths sounds can take between source and listener in a three-dimensional space. More often, reducing the actual motion to a single magnitude better represents the abstract nature of sound.

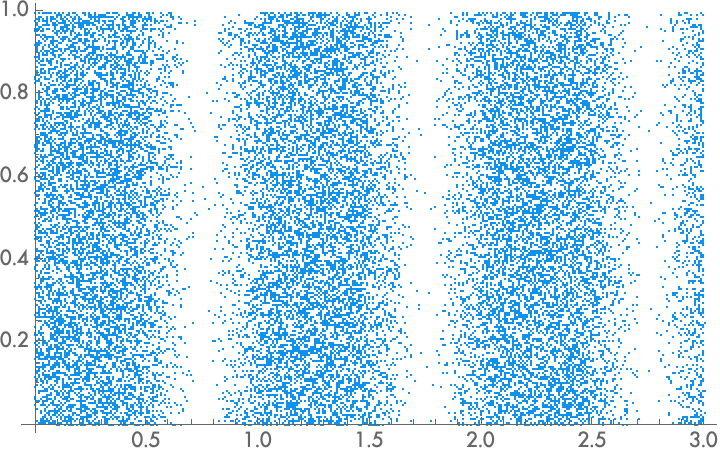

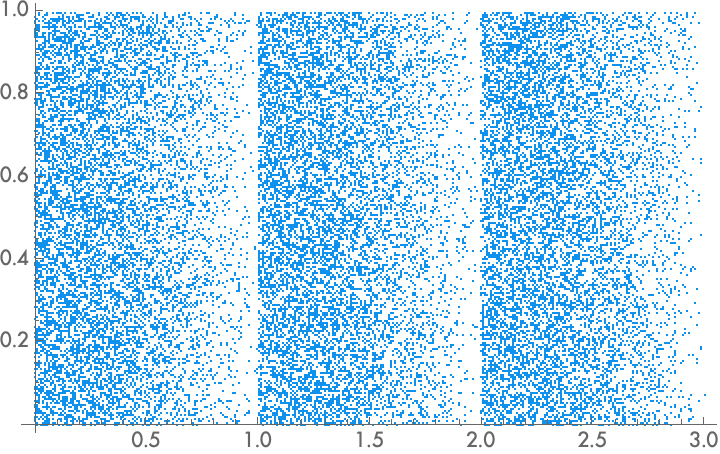

Imagine a fixed string vibrating in all its modes at once: along the string, and in a complex elliptical motion. The amplitude, properly speaking, will be a set of vectors extending along the whole length of the string indicating the displacement and the direction of displacement. But anything interacting with that string doesn't do so as a whole, but according to the way it is coupled with the string. For example, the bridge on a violin sits under a part of the string, and makes the tension of the string press into the wood. Changes in that tension will move the bridge, which in turn will move the wooden top of the violin, causing it to resonate. This resonance will cause the sound wave to move through the air. In all of these couplings, while it might be the case that certain kinds of motion are more closely coupled than others, what is most important is really just the change itself. One change is coupled to another as sound travels across different media. Consequently, when we speak of amplitude, we generally reduce it to the total changed magnitude, a one-dimensional quantity. If you look at the graphs above, you'll see this reduction: a three-dimensional physical sound wave (although only two dimensions are represented), and the corresponding abstraction, involving only amplitude and time.
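
One possible way to picture this reduction is to project the motion of a single point on the string onto the one direction in which something is coupled to it. The Python sketch below does this for an elliptical motion. Treating the reduction as a projection is my assumption here, and the names `string_displacement` and `coupled_amplitude`, the 110 Hz frequency, and the peak values are all hypothetical.

```python
import math

def string_displacement(t, frequency_hz=110.0, y_peak=1.0, z_peak=0.4):
    """Elliptical motion of one point on a string: its (y, z) displacement at time t."""
    phase = 2 * math.pi * frequency_hz * t
    return (y_peak * math.cos(phase), z_peak * math.sin(phase))

def coupled_amplitude(displacement, coupling_axis=(0.0, 1.0)):
    """Reduce a two-dimensional displacement to one signed number.

    Only the part of the motion that lies along the coupling axis is kept,
    for example the direction in which the string presses on a bridge.
    """
    axis_y, axis_z = coupling_axis
    axis_length = math.hypot(axis_y, axis_z)
    y, z = displacement
    return (y * axis_y + z * axis_z) / axis_length

# The same elliptical motion becomes a plain amplitude-over-time series.
times = [i / 8000 for i in range(80)]
amplitudes = [coupled_amplitude(string_displacement(t)) for t in times]
```

A different coupling axis yields a different one-dimensional wave from the same physical motion, so the reduction always depends on how the media are joined.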

Nevertheless, there is a tension here. If we reduce the physical objects in which sound exists to the media through which sound travels—that is, if we treat sound as an abstraction—then we risk losing some of the complexities of sound as it really exists in the world. On the other hand, if we refuse to abstract, if we treat the real complexity of sonic phenomena as unsimplifiable, we will have difficulty explaining what is common between the motion of a string, the motion of the wood, and the motion of the air. But it is difficult to say just where this common abstraction is. Is the abstraction of repetition a fundamental object of the universe? Is sound a fundamental building block of reality? Or is the sound abstraction just something human, just a way we have to group together various phenomena? Generally people give the latter answer, but consider that it might be more than just us humans seeing common patterns in disparate phenomena. In a violin, the motion of the string, the wood, and the air really are coupled together, which is to say, through physical interaction, these three different media work to create and transmit a single thing: sound. How could that work if sound didn't exist as a real abstraction apart from the motion of each of those media?

Approaches to Sound, Approaches to Synthesis

Looking at sound from a distance, what we hear is basically the physical sound, and the physical sound is basically characterized by our abstract description of it. But only from a distance. These three things are not actually quite the same, and choosing between them has real consequences for our art. While for a lot of music this is a subtle point, for synthesis this has been a central point of contention since its creation: what is synthesis synthesizing?

First, synthesis might be synthesizing sounds according to the properties of physical instruments and spaces. If this is the case, then you want instruments which let you quickly make decisions based on characteristics that are commonly found in natural settings. While in its least adventurous form this approach just replicates the sound of existing instruments as exactly as possible, it can also be used to combine different physical models to create new kinds of sounds.

Second, synthesis might be synthesizing sounds according to how we hear. In this case, we are going to make sounds that address psychoacoustic questions, like what kind of motions between frequencies seem equally spaced? What kind of motions between soft and loud sounds seem equally spaced? Which harmonics are most important in identifying a type of sound?

Last, synthesis might be synthesizing sounds according to their abstract characteristics. In this approach, while you may hit the simple laws of psychoacoustic and physical relationships, that's not really the point. Sounds are created from certain building blocks, with a preference for simplicity, elegance, and musicality. The only rule for creating relationships between all of these building blocks is abstract musical need.

(By the way, I don't claim to be unbiased in this presentation. It is probably already clear that this series, and New Systems Instruments modules more generally, take this last approach.)

None of these approaches to sound are bad, but just as much, none of them are definitively correct. And yet, they are also unequal. We can build an approach with parts of more than one of them, but the eclectic approach is still just another approach. So how do we choose our approach?

Any commitment to an idea will involve stakes of various kinds: aesthetic, political, emotional, etc. In any practice, you will have to think about what's important for you and develop your approach accordingly. For example, on the one hand you might feel like this world spends enough time treating humans like machines, and with a psychoacoustic approach to sound we can recenter our own being as human subjects, not just cogs in a mechanistic worldview. On the other hand, you might feel that the psychoacoustic approach privileges a certain kind of human subject, ignoring nonhumans (why not make music for your dog?), but also erasing the variances within the human species itself—how do people with cochlear implants experience sound? Then again, it turns out that psychoacoustic studies on questions like these do exist, although they generally don't make it into introductory textbooks. So maybe the psychoacoustic approach helps break down these same implicit assumptions that are hiding in our abstract concept of music and sound.

What is most important and how to choose it can't always be clearly articulated. You simply have to navigate this question as best as you can. The only clearly wrong approach is to decide that nothing is important, and that you aren't responsible for your choices. In other words: be conscious. Whether you think about them or not—and thinking intuitively is still thinking—these stakes will exist, and you will make choices, and these choices will affect and inform your art.

But just because we don't have clear answers doesn't mean that our answers are subjective or personal. What's important is important in part because it has consequences out there, in the objective world. We support and critique each other on the path to knowledge. No one can decide for you, but you don't have to decide alone.

References

The question about a tree falling in the forest has its origins in Western hyperempiricist, idealist philosophy. There are similar questions in the work of Berkeley. We also find these ideas in the early 20th century philosophical movement which grew alongside the revolutions in physics (quantum physics and relativity), typified by Ernst Mach and Richard Avenarius. For a somewhat crude but useful refutation of these idealist works, see Lenin, Materialism and Empirio-Criticism.

A good survey of recent work on the selective amplification of the ear can be found in an article by J. Ashmore et al.: “The Remarkable Cochlear Amplifier,” in the journal Hearing Research (no. 266, 1–2, 2010).

Donna Haraway writes about the relationship between subjectivity and technology in her “A Manifesto for Cyborgs,” published in the Socialist Review journal (no. 80, 1985: pp. 65–108). It has been reprinted in many other collections and can easily be found online.

The emphasis on listening vs. hearing is part of Pauline Oliveros' deep listening practice. From decades of practice encouraging her participants to truly, actively attend to the sounds around them, Oliveros develops techniques to help us develop these listening skills. Some of Oliveros' writings can be found in Deep Listening: A Composer's Sound Practice.

The idea of a really existing abstraction generally follows the Hegelian tradition, and while I think Hegel goes too far, he is on to something here. The phrase itself comes from Alfred Sohn-Rethel, in Intellectual and Manual Labor, although he uses it in a somewhat different sense than I do.

A good article on musical hearing for those with cochlear implants is “Music Appreciation and Training for Cochlear Implant Recipients,” in the Hearing journal (no. 33 (4), Nov. 2012).

Christine Sun Kim is a nonhearing artist some of whose work addresses her experience of sound. Evelyn Glennie, an accomplished percussionist, has spoken about her experience learning to listen while deaf in a talk entitled, “How to Truly Listen.”

The image of the interior of a cochlea is CC-BY 4.0 from Andrew Forge, and the image of the entire cochlea plus the close up of the hair cells is CC-BY-NC 4.0 from David Furness. Thank you for making your images available!